The secret word for the day, boys and girls, is “routers”.

But first, a couple of pictures for my great and good friend Borepatch:

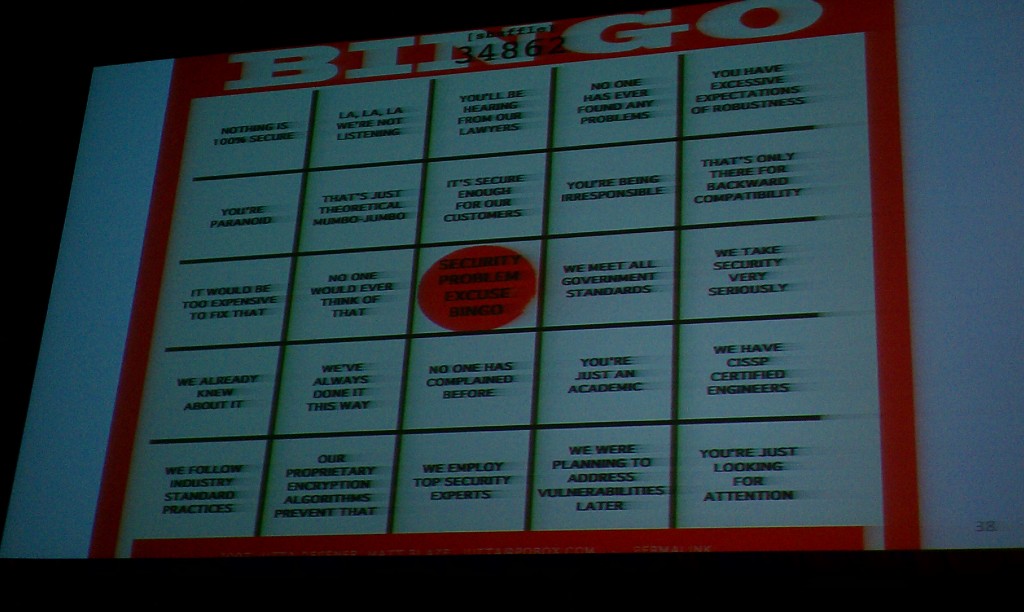

The Matt Blaze Security Bingo Card. (I hope folks can read it: I took that with a cell phone camera from the front row, so I didn’t have a great angle on it.)

And:

A gentleman in the hallway was kind enough to let me take a photo of his DEFCON Shoot shirt.

Speaking of Matt Blaze…

“SIGINT and Traffic Analysis for the Rest of Us” presented by Matt Blaze and Sandy Clark, and crediting a host of other folks.

For the past few years, Blaze and company have been working on APCO Project 25, or P25 for short. P25 is planned to be the next generation of public safety radio, and is intended to be a “drop-in” replacement for analog FM systems. Cryptographic security is built into P25: it uses symmetric algorithms and supports standard cryptographic protocols. All of this sounds great.

But there are a whole bunch of problems with this.

Encryption in P25 doesn’t work very well a significant portion of the time. There are user interface issues; on some radios, the “crypto” switch is in an obscure location, and the display doesn’t make it clear if encryption is on or off. Keys can’t be changed in the field; changing keys requires loading the radio in advance using a special device, or sending keys over the air (“Over The Air Rekeying”, or “OTAR”, which sometimes doesn’t work).

One important point is that the “sender” makes all the decisions: whether the traffic is encrypted, what encryption mode is used, what key is used, etc. The “receiver” doesn’t get to decide anything. If the “sender” sends in cleartext, either deliberately or by mistake, the “receiver” decodes it, automatically and transparently to the user. If the “sender” sends an encrypted message, the “receiver” first checks to make sure it has the proper key, then either decrypts the message or ignores it (if the “receiver” doesn’t have the key).
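To make that concrete, here is a rough sketch of the receive-side behavior as described in the talk. This is not code from any real P25 radio: the `Frame` fields, the `receive` function, and the action strings are all names I made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Frame:
    """Hypothetical stand-in for a received P25 voice transmission."""
    unit_id: int            # sent in the clear regardless of encryption
    encrypted: bool         # chosen by the sender
    key_id: Optional[int]   # which key the sender used, if any


def receive(frame: Frame, loaded_key_ids: Set[int]) -> str:
    """Return what the receiving radio does with a frame.

    The receiver never refuses cleartext; it only follows the sender's choice.
    """
    if not frame.encrypted:
        # Cleartext is played automatically, with no prompt to the user.
        return "play_clear"
    if frame.key_id in loaded_key_ids:
        return "decrypt_and_play"
    # Encrypted with a key this radio does not hold: silently dropped.
    return "ignore"
```

Note that there is no branch where the receiver rejects cleartext, which is why one mistaken switch setting on the sending side goes unnoticed by everyone else on the channel.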

I feel like I am cheating a little here, but even Matt Blaze at this point in his talk recommended going and reading the group’s paper from last year, “Why (Special Agent) Johnny (Still) Can’t Encrypt: A Security Analysis of the APCO Project 25 Two-Way Radio System” for additional background.

But wait, there’s more! We have encryption, but do we have authentication? Do we know that the radios on our network are actually valid radios? Heck no! The radios transmit a “Unit ID” which is not authenticated, and which is never encrypted, even if the radio has encryption turned on. Just knowing the Unit IDs lets you do some interesting stuff: you could, for example, set up two radios, do some direction finding on the received signals tagged with each Unit ID, and build a map of where the users are.
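The geometry behind the two-receiver idea is just intersecting two bearing lines. Here is a minimal sketch, assuming flat ground, receivers at known (east, north) coordinates, and bearings measured in degrees clockwise from north. The function name and coordinate convention are mine, not anything from the talk.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]  # (east, north), in any consistent unit


def locate(p1: Point, bearing1_deg: float,
           p2: Point, bearing2_deg: float) -> Optional[Point]:
    """Intersect two bearing lines; return None if they are parallel."""
    # Unit direction vectors: bearing 0 points north, 90 points east.
    d1 = (math.sin(math.radians(bearing1_deg)), math.cos(math.radians(bearing1_deg)))
    d2 = (math.sin(math.radians(bearing2_deg)), math.cos(math.radians(bearing2_deg)))

    # 2D cross product of the two directions; zero means no unique crossing.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        return None

    # Solve p1 + t1*d1 == p2 + t2*d2 for t1.
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])
```

Repeat that for each Unit ID you hear and you have the beginnings of the map. Real direction finding has measurement error and multipath to deal with, which this sketch ignores.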

Even better: if you send a malformed OTAR request, the radios treat it like a UNIX “ping” and respond back with their Unit ID, even if they’re idle, and without the user ever knowing.

More: P25 uses aggressive error correction. But there’s a hole in the scheme; you can jam what’s called the “NID”, which is part of the P25 transmission, and render the transmissions unreadable. The Blaze group actually built a working jammer by flashing custom firmware onto the “GirlTech IM-Me”. (That was the cheapest way to get the TI radio chip they wanted to use.) You could use this to jam the NID in encrypted P25 traffic only, thus forcing cleartext on the users…

And even more: the basic problem with P25 and cryptographic security is usability. Every time an agency rekeys, someone is without keys for a period of time. Blaze mentioned the classic paper, “Why Johnny Can’t Encrypt: A Usability Evaluation of PGP 5.0”, and pointed out that many of the mistakes mentioned in that paper were repeated in designing P25.

How bad is the keying problem? Bad enough that agencies frequently transmit in cleartext, due to key management issues. (“NSA Rule Number 1: Look for cleartext.”) How frequently? Blaze and his group, for the past several years, have been running a monitoring network in several (unnamed) cities, recording cleartext P25 traffic and measuring how often this happens. About 20-30 minutes per day, by their estimate, of radio traffic is transmitted in unintended cleartext. And that traffic can contain sensitive information, like the names of informants.

Even if most of the traffic is encrypted, remember that the Unit IDs aren’t. So you’re getting some clear metadata traffic, which at the very least is useful for making inferences about what might be going on. (Zendian Problem, anyone?)
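As a toy illustration of what “clear metadata” buys you, here is a sketch that tallies transmissions per Unit ID without touching the voice payload at all. The observation format (timestamp, Unit ID, encrypted flag) and the sample values are invented for the example; nothing here is data from the talk.

```python
from collections import Counter
from typing import Iterable, Tuple

Observation = Tuple[int, int, bool]  # (timestamp, unit_id, encrypted)


def activity_by_unit(observations: Iterable[Observation]) -> Counter:
    """Count transmissions per Unit ID, whether or not the voice was encrypted."""
    return Counter(unit_id for _, unit_id, _ in observations)


# Made-up sample: three of the four transmissions are encrypted,
# but every one of them still reveals who was talking.
observations = [
    (0, 101, True),
    (5, 102, True),
    (9, 101, True),
    (12, 101, False),
]
counts = activity_by_unit(observations)
```

A sudden jump in activity from a handful of Unit IDs tells you something is happening, even if you never hear a word of it.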

(If you’re monitoring P25 traffic, according to Blaze, the phrase you want to look for is “Okay, everyone, here’s the plan.”)

And what is the P25 community response to this? According to Blaze, the Feds have been very responsive and appreciate him pointing out the problem. The P25 standards people, on the other hand, claim Blaze is totally wrong, and that the problem is with the stupid users who can’t work crypto properly.

(This entry on Matt Blaze’s blog covers, as best I can tell, almost everything that was in his presentation. I haven’t found a copy of the actual presentation yet, but this should do to ride the river with.)

So it is getting late here, and I have to catch a plane early-ish in the morning. I think what I’m going to do is stop here for now, and try to get summaries of the three router panels up tomorrow while I’m waiting for my flight.